Expectation Maximization from scratch in Swift

"The only way to learn a new programming language is by writing programs in it" - Dennis Ritchie

This project work was inspired from Ritchie Vink for his article on GMMs and Alexander Tar thanks to its Swift implementation of statistical library

If you want to test the code on your side, you can go on the github notebook and execute the code in a google colab notebook

The Swift programming language

As the quote mentions, the aim of this project is both to improve my skills in data science by getting head-first in the algorithm we use every day to implement more complex code and also to learn a brand new language getting more and more famous : Swift.

One may wonder why Swift ? When we mention this language we first think about iOS application development so why getting into this language rather than Julia or even the classic ones Java/C++ which are already used in the industry for years ?

To make it short, Swift has 3 advantages that made me choose to get into this language rather than more recent ones (Julia) or older ones (C++):

1 - The language is compiled and offers great performances close to C language.

2 - The readability of the language is great compared to C++ or related.

3 - The support of great industries like Google (with Swift for TensorFlow) and Apple to make improvement in this language compared to more open source languages like Julia which are great but don't have as much support from the industry as the Swift language may have.

To get more information on why this language may be great to learn and have some more details, I'll redirect you to the comprehensive article written by Jeremy Howards on this topic and his projection to improve his future deep learning framework through this language

Gaussian Mixture model

My goal is to give more explainability to the use of data science models. For this project, we are interested in the topic of unsupervised learning. If we are initiated to the machine learning area, the first thing that comes to our mind when talking about machine learning algorithm for unsupervised learning tasks are K-means, Density (DBSCAN) or mean shift algorithms.

The Issue with such topics is about how to give a boudary to the uncertainty of the clusters found by our algorithm ? How uncertain is our result ? Especially in unsupervised learning we need more to give us more confidence if we don't have any labels in our datasets.

We'll expose the problem through the clustering of a gaussian mixture model with the use of Expectation Maximization algorithm.

For the sake of simplicity, this project was made on 1D, in a next project, I'll use a general case with a multivariate distribution but it can take a little more time as it's done in Swift and not in Python.

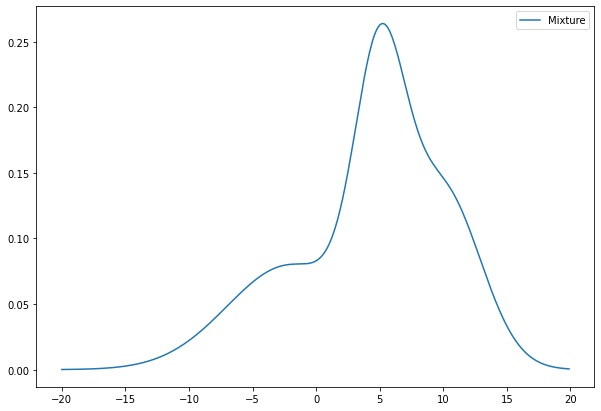

The problem to solve is to decompose a probability distribution with several Gaussian distributions. Here we have an example of mixture model :

This distribution has been created by summing 2 Normal distributions, the aim of the algorithm is to find out these distributions and their parameters. The aim of our GMM is to model a probability distribution with several Gaussian distributions.

The most natural distribution we'll use to decompose this curve with is the Normal distribution, from there, we'll need an algorithm to infer these parameters :

This is where we'll introduce the Expectation Maximization algorithm

We assume a generative process where $X$ is the observed data corresponding to the data we want to fit the model on.

$z_i$ is the hidden random variable (aka latent but let's keep it easy here) that determines from which gaussian $i = \{1,...,m\}$, $m \in \mathbb{N}$

the observed data $x_k$ has been sampled from (where $k \in \mathbb{N}$ is the index of sample of the observed random variable $X$).

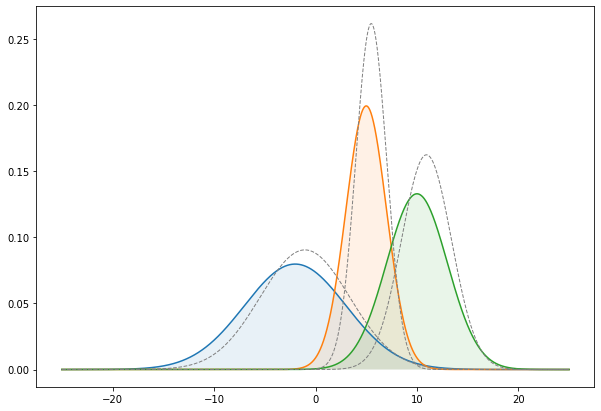

Below you'll see the 3 Gaussian curves from which we created the distribution to fit :

From there, we'd like to know what are the parameters of these gaussian distributions where for each gaussian $i$ :

$$x_k|z_i \sim \mathcal{N}(\mu_i, \sigma_i^2)$$

Where $z_i = \left\{

\begin{array}{ll}

1 & \mbox{if $x_k$ belongs to gaussian $i$} \\

0 & \mbox{otherwise.}

\end{array}

\right. $

then the distribution of $z_i$ would be :

For our example, we'll have 3 gaussians with $m = 3$.

The Swift code for this part :

import Foundation

import Darwin

import Python

%include "EnableIPythonDisplay.swift"

IPythonDisplay.shell.enable_matplotlib("inline")

let plt = Python.import("matplotlib.pyplot")

...

let array_range:Array = Array(stride(from: -25.0, to: 25.0, by: 0.01))

let generative_m_ = [Normal(mean:-2.0 , sd: 5.0),

Normal(mean: 5.0 , sd: 2),

Normal(mean: 10.0 , sd: 3)]

plt.figure(figsize:[10,7])

plt.plot(array_range,array_range.map{generative_m_[0].pdf($0)})

plt.plot(array_range,array_range.map{generative_m_[1].pdf($0)})

plt.plot(array_range,array_range.map{generative_m_[2].pdf($0)})

plt.plot(array_range, zip(zip(array_range.map{generative_m_[0].pdf($0)},array_range.map{generative_m_[1].pdf($0)}).map(+), array_range.map{generative_m_[2].pdf($0)}).map(+), ls:"--",lw:"1",color:"grey")

plt.fill_betweenx(array_range.map{generative_m_[0].pdf($0)},array_range,alpha:0.1)

plt.fill_betweenx(array_range.map{generative_m_[1].pdf($0)},array_range,alpha:0.1)

plt.fill_betweenx(array_range.map{generative_m_[2].pdf($0)},array_range,alpha:0.1)

plt.title("Gaussian mixture model")

plt.show()

You may wonder why we use Swift arrays instead of Python if we still use matplotlib. The reasons are because Python arrays are considered as "Python object" in Swift, so the interoperability is still limited. The second reason being that Swift objects are potentially way faster that Python objects which are just "references" to C code but not "value" objects, if you want more information between the difference between reference vs value types go check this link

Expectation Maximization

The aim of this algorithm is to find a local maximum likelihood or maximum a posteriori (MAP) estimates of parameters in the statistical model (Here a Gaussian mixture model). Our GMM depends on unobserved latent parameters (named $Z$) which are the parameters of the gaussians composing the mixture. And therefore, we'd like to find the optimal values of theses latent parameters to fit the gaussians which makes our model. But as we don't know the number of gaussians, $z_i$ is hidden and unknown so the likelihood has no known equation/known form.For 1 gaussian, the maximum likelihood would be : $L(\theta;X)=L(\theta;x_1,...x_N) = f(x_1;\theta) \times ... \times f(x_N;\theta) = \prod_{k=1}^{N} f(x_k;\theta) = \prod_{k=1}^{N} p(x_k|\theta) $

with : $\theta = \{\mu, \sigma \}$ being shortcut for the parameters to estimate.

Yet here we clearly have a multimodal distribution so just solving the maximum likelihood would not fit our observed data very well.

In order to fit it, we need to solve the values of another random variable $Z$ which would give us the number of gaussians and find its associated parameter $\phi$

From there, our set of parameters to optimize for the solving of maximum likelihood becomes : $\theta : \{ \mu, \sigma, \phi \}$

And our likelihood function becomes : $L(\theta;X,Z) = P(X,Z|\theta)$

To find the maximum likelihood estimate, we'll then maximize the marginal likelihood of the observed data : $L(\theta;X) = p(X | \theta) = {\displaystyle \int }p(X,Z|\theta)dZ$

Where $L(\theta;X) = P(X | \theta) = {\displaystyle \prod_{k=1}^{N} }P(x_k|\theta) = {\displaystyle \prod_{k=1}^{N} } {\displaystyle \int_{z} }p(x_k, z | \theta) dz = {\displaystyle \prod_{k=1}^{N} } {\displaystyle \int_{z} }p(x_k| z, \theta)p(z|\theta) dz$ In our case, $Z$ is discrete so we'll rewrite the equation : $$L(\theta;X) = {\displaystyle \prod_{k=1}^{N} }{\displaystyle \sum_{i=1}^{m} }p(x_k|z_i, \theta)p(z_i|\theta) $$ And if we take the log of the expression, we get the log-likelihood expression we want to maximize : $$l(\theta;X) = log(L(\theta;X)) = {\displaystyle \sum_{k=1}^{N} }log ({\displaystyle \sum_{i=1}^{m} }p(x_k|z_i,\theta)p(z_i|\theta))$$ From this expression we know :

Yet, we don't know much on our gaussians! What are the true parameters $\theta$ for each gaussians $z_i$ ? Parameters also depends on the observation $X$.

In order to solve this issue the intuition is the following, we'll loop for $i = 1..n$ (number of gaussians) :

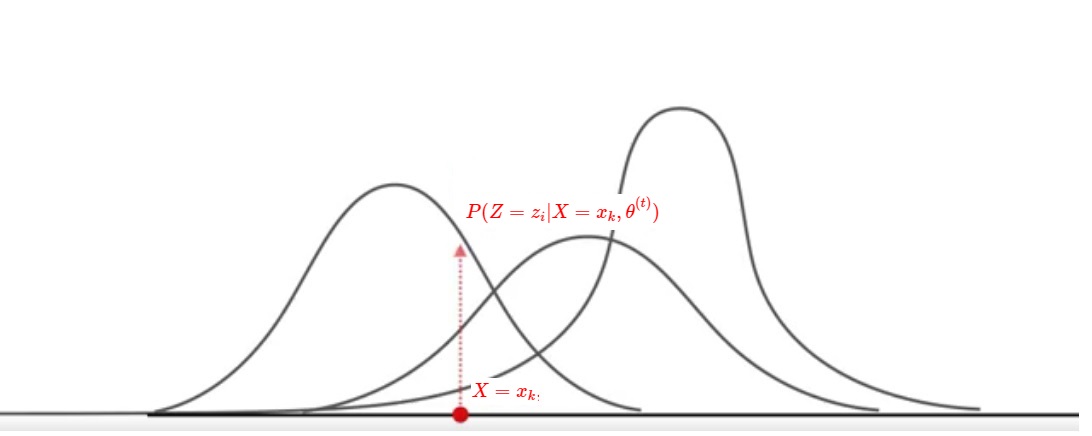

1 - We weight the GMM likelihood by multiplying it by $P(Z = z_i | X = x_k, \theta^{(t)})$, the gaussian distribution $z_i$ conditioned by our observations $x_k$ and parameters $\theta$

2 - We calculate the mean of the "weighed likelihood" with conditional expectation $E_{Z|X,\theta}[...]$ which will correspond to a (local) maximum because the log-likelihood is a concave function.

3 - With the knowledge of the precedent values, we update the parameters values $\theta^{(t)}$ at iteration $t$ for the gaussian $z_i$ until one or several parameter of $\theta^{(t)} \leq \theta_{new} + \epsilon$ with a manually configured threshold $\epsilon$ which will define the "convergence" of the sampling of the gaussian parameters

Then loop to the next $i$

We can illustrate what we said before with the following illustration which shows for 1 loop at 1 point $x_k$ :

We'll use the EM algorithm to infer the value of the hidden random variable $Z$ (in other words, infer how probable is each gaussian $z_i$ with the parameters $\theta$ on the observed data $x_k$) through an iterative procedure by changing the parameter's values $\theta$ at each iteration :

Expectation step:

"What is the likelihood the selected gaussian is the a priori $i$-th one knowing the observed data $x_k$ for a fixed set of parameters $\theta$ ?" or

"What is the a posteriori probability the gaussian selected is the $i$-th one conditioned on the observed data $X$ and the fixed parameters $\theta$?"

Maximization step:

Swift in action

After some painful theory, we have implemented the algorithm in Swift just below :

public struct EM {

var k: Int

var mu: [Double] = []

var std: [Double]

var w_ij: [[Double]]()

var elements: [Double] = []

var phi: [Double] = []

var tot_dims: [Double] = []

var sum_ax1: [Double] = []

init (_ k: Int){

self.k=k

self.std = [Double](repeating:1, count: self.k)

self.phi = [Double](repeating:1, count: self.k).map{$0 / Double(self.k)

self.mu = [Double](repeating:0, count: self.k).map{$0 / Double(self.k)

}

public mutating func expectation_step(_ x:[Double]) {

self.elements = [Double](repeating:0, count: x.count)

self.tot_dims = self.elements

for z_i in 0..<self.k {

self.w_ij.append(self.elements)

self.w_ij[z_i] = x.map{Normal(mean: self.mu[z_i], sd:self.std[z_i]).pdf($0)}

self.w_ij[z_i] = self.w_ij[z_i].map{ $0 * self.phi[z_i]}

}

for row in 0..<self.elements.count {

for column in 0..<self.w_ij.count {

self.tot_dims[row] = self.w_ij[column][row] + self.tot_dims[row]

}

}

for column in 0..<self.w_ij.count {

for row in 0..<self.elements.count {

self.w_ij[column][row] = self.w_ij[column][row] / self.tot_dims[row]

}

}

}

public mutating func maximization_step(_ x:[Double]) {

self.sum_ax1 = self.elements

for i in 0..<self.k

self.phi[i] = self.w_ij[i].average() {

//Maximization of mu : (self.w_ij * x).sum(1) / self.w_ij.sum(1)

self.mu[i] = ((0..< x.count).map{self.w_ij[i][$0] * x[$0]}).reduce(0,+)

self.mu[i] = self.mu[i] / self.w_ij[i].sum()

//Maximization of std : self.std = ((self.w_ij * (x - self.mu[:, None])**2).sum(1) / self.w_ij.sum(1))**0.5

self.sum_ax1 = (0..< x.count).map{self.w_ij[i][$0] * pow((x[$0] - self.mu[i]),2)}

self.std[i] = self.sum_ax1.reduce(0,+) / self.w_ij[i].sum()

self.std[i] = pow(self.std[i], 0.5)

}

}

public mutating func fit(_ x:[Double]) {

self.mu = Uniform(a: x.min()!, b:x.max()!).random(self.k)

self.elements = [Double](repeating:0, count: x.count)

for i in 0..<self.k {

self.w_ij.append(self.elements)

}

var last_mu:[Double] = [Double](repeating:Double.infinity, count: self.k)

var diff_bools: [Bool] = [Bool](repeating: false, count:self.k)

for i in 0..<self.k {

diff[i] = last_mu[i] - self.mu[i]

if testNearZero_(diff[i]) { diff_bools[i] = true }

else { diff_bools[i] = false }

}

while !diff_bools.allSatisfy({$0 == true}) {

last_mu = self.mu

self.expectation_step(x)

self.maximization_step(x)

for i in 0..<self.k {

diff[i] = last_mu[i] - self.mu[i]

if testNearZero_(diff[i]) { diff_bools[i] = true }

else { diff_bools[i] = false }

}

}

}

}

var obj = EM(3)

obj.fit(x_i)

We can look at the final fit by using the fitted parameters for our gaussians once the EM algorithm has converged :

let fitted_m = [Normal(mean:obj.mu[0] , sd: obj.std[0]),

Normal(mean:obj.mu[1] , sd: obj.std[1]),

Normal(mean:obj.mu[2] , sd: obj.std[2])]

plt.figure(figsize:[10,7])

plt.plot(array_range,array_range.map{generative_m_[0].pdf($0)})

plt.plot(array_range,array_range.map{generative_m_[1].pdf($0)})

plt.plot(array_range,array_range.map{generative_m_[2].pdf($0)})

plt.plot(array_range,array_range.map{fitted_m[0].pdf($0)}, lw:1,ls:"--",c:"grey")

plt.plot(array_range,array_range.map{fitted_m[1].pdf($0)}, lw:1,ls:"--",c:"grey")

plt.plot(array_range,array_range.map{fitted_m[2].pdf($0)}, lw:1,ls:"--",c:"grey")

plt.show()

Not too badly, we can see our parameter search reasonably fit the true gaussians of our data. The dashed curve are the "fitted" gaussians with the parameters found through EM algorithm.

There are a few limitations we mentionned that we can sum up to as a conclusion :